ngrep2curl - How to replay a browser web request

Many times, I have found myself downloading a file twice because cookies or other required browser headers prevent curl from downloading it directly from the command line.

To simplify this process, I wrote a script that:

1) Listens on port 80 to grab the HTTP headers of a download started in the browser

2) Generates a curl command using the captured headers.

Old workflow (file transferred twice):

Web Browser -> browse to file -> download to local machine

sftp to remote server

New workflow (file transferred just once):

run ngrep2curl on local machine

Web Browser -> browse to file -> download just the beginning of the file -> cancel download

run the generated curl command on remote server

#!/bin/sh

ngrep port 80 -Wbyline |grep -vE '^#|^T '|head -20|sed 's/\.$//'|awk '/GET/{url=$2} /Host:/{host=$2} /User-Agent:/{gsub("User-Agent: ","");ua=$0} /Cookie:/{gsub("Cookie: ","");cookie=$0} /Referer:/{gsub("Referer: ","");ref=$0} /Accept:/{accept=$0} END{print "curl -L","\x27"host url"\x27","-A","\""ua"\"","-b\""cookie"\"","-e \x27"ref"\x27","-H","\x27"accept"\x27"}'
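To check what the awk stage builds without sniffing live traffic, it can be fed a canned request. The sketch below is a more readable variant of the same idea, not the one-liner itself. The function name headers2curl and the sample host, path and cookie are made up for illustration.

```sh
#!/bin/sh
# Sketch: turn captured request headers (one per line on stdin) into a curl command.
# headers2curl is a hypothetical helper name; the header values below are invented.
headers2curl() {
  awk -v q="'" '
    /^GET /         { url = $2 }
    /^Host: /       { host = $2 }
    /^User-Agent: / { sub(/^User-Agent: /, ""); ua = $0 }
    /^Cookie: /     { sub(/^Cookie: /, "");     cookie = $0 }
    /^Referer: /    { sub(/^Referer: /, "");    ref = $0 }
    /^Accept: /     { accept = $0 }
    END {
      print "curl -L " q host url q " -A \"" ua "\" -b \"" cookie "\" -e " q ref q " -H " q accept q
    }'
}

headers2curl <<'EOF'
GET /files/big.iso HTTP/1.1
Host: example.com
User-Agent: Mozilla/5.0
Accept: */*
Referer: http://example.com/downloads
Cookie: session=abc123
EOF
```

Passing the single quote in through -v q keeps the awk program free of quote escapes, which makes it easier to read than the \x27 form.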

Wednesday, November 23, 2011

Monday, September 26, 2011

High Performance Text Processing -Taco Bell style

Following the Taco Bell programming methodology, we will process a huge amount of data using only a few simple ingredients (i.e. unix command line tools).

Most people won't think twice about writing thousands of lines of code to accomplish what a line or two of bash script will handle.

Some anti-patterns to avoid come to mind:

NIH (Not Invented Here)

Golden Hammer (Treat every new problem like it's a nail.)

Re-inventing the wheel

Text processing is composed of four primary activities: filtering, transforming, sorting and joining.

To achieve the fastest processing speed, you should try grouping all of your filtering, transforming and joining tasks together in one pipeline.

Stream processing tasks (filtering, transforming, joining) are limited only by disk I/O, so take advantage of the disk scan and apply all operations as co-routines while you read the file.

Let's say I need to apply 5 regular expressions to a file:

Example (as co-routines; both ways are equally fast):

time cat bigfile \

|grep -vE "[^a-z0-9 ][^a-z0-9 ]|[^a-z0-9] [^a-z0-9]||\. |[a-z]' " \

> bigfile.clean

OR

time cat bigfile \

|grep -v '[^a-z0-9 ][^a-z0-9 ]' \

|grep -v '[^a-z0-9] [^a-z0-9]' \

|grep -v '' \

|grep -v '\. ' \

|grep -v "[a-z]' " \

> bigfile.clean

Another example (same thing- but 5 times slower):

time cat bigfile|grep -v '[^a-z0-9 ][^a-z0-9 ]'>tmpfile1

time cat tmpfile1|grep -v '[^a-z0-9] [^a-z0-9]'>tmpfile2

time cat tmpfile2|grep -v '' >tmpfile3

time cat tmpfile3|grep -v '\. '>tmpfile4

time cat tmpfile4|grep -v "[a-z]' " >bigfile.clean

Using temp files here causes the equivalent of 5 full text scans on the data when you should really only be reading the data once.
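As a small illustration of the single-pass idea, the sketch below filters, transforms and sorts in one pipeline with no temp files in between. The function name clean_stream and its two patterns are invented for the example; they are not the patterns from this post.

```sh
#!/bin/sh
# Sketch: every stage runs concurrently on the same stream, so the input is read once.
# clean_stream and its patterns are illustrative only.
clean_stream() {
  grep -v -e '[0-9][0-9][0-9]' -e '^#' |   # filter: drop lines with 3-digit runs or comments
    tr '[:upper:]' '[:lower:]' |           # transform: fold to lowercase
    sort -u                                # sort and de-duplicate (must see all input first)
}

printf '%s\n' 'Banana' '# comment' 'apple' 'code 404' 'banana' | clean_stream
```

Giving grep several -e patterns is another way to get the effect of one combined expression without building a long alternation by hand.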


Friday, September 16, 2011

Wednesday, September 07, 2011

Select 'Text Processing' from UNIX like 'a BOSS'

1) Learn the commands, avoid re-inventing wheels

Bootstrap method:

First, read every man page and understand that each command can be fed into any other command. There are around 2000 commands on a typical UNIX box but you only need to know a few hundred of these. If you have a specific task you can usually figure out which commands to use with the "apropos" command.

Read the entire man page for important commands but just skim the top description for the others (like encryption or security programs).

Here's how to get a list of commands available on your system (logged in as root):

(The script command will screen capture into a file named typescript)

Press the tab key twice to see available commands-

#script

-tab- -tab-

Display all 2612 possibilities? (y or n)

y

! icc2ps ppmtobmp

./ icclink ppmtoeyuv

411toppm icctrans ppmtogif

7za iceauth ppmtoicr

: icnctrl ppmtoilbm

Kobil_mIDentity_switch icontopbm ppmtojpeg

MAKEDEV iconv ppmtoleaf

--More--

...

#ctl-d

# exit

Script done, file is typescript

Get rid of DOS newlines

#dos2unix typescript

Manually clean up "--More--" if needed using emacs or vi editor.

#emacs typescript

Loop over the commands and keep only commands with a man page

# (for k in `cat typescript`; do echo -n "$k="; man $k|wc -l ; echo; done 2>/dev/null)|sort|grep '=[1-9]' >command.list
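If capturing tab completion with script feels awkward, bash can print the same kind of list directly through its compgen builtin. The sketch below is an alternative to the steps above, not the method used in this post. The helper names list_commands and man_lines are made up.

```sh
#!/bin/sh
# Sketch: list every command name bash can complete, without the script/tab-tab capture.
# compgen is a bash builtin, so bash is invoked explicitly; helper names are hypothetical.
list_commands() {
  bash -c 'compgen -c' | sort -u
}

# Print "name=lines" for one command if it has a man page, nothing otherwise.
man_lines() {
  n=$(man "$1" 2>/dev/null | wc -l | tr -d ' ')
  if [ "$n" -gt 0 ]; then
    echo "$1=$n"
  fi
}

list_commands | wc -l     # how many names are available
```

To rebuild command.list this way, something like `list_commands | while read -r k; do man_lines "$k"; done > command.list` should do; expect it to take a while on a box with a few thousand commands.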

Look over the list

awk=1612

badblocks=119

baobab=28

base64=60

basename=52

bash=4910

batch=177

bc=794

bccmd=116

bdftopcf=71

2) Start "Reading The Fine Manpages"

# man man

man(1) Manual pager utils man(1)

NAME

man - an interface to the on-line reference manuals

SYNOPSIS

man [-c|-w|-tZHT device] [-adhu7V] [-m system[,...]] [-L locale] [-p string] [-M path] [-P pager] [-r prompt] [-S list] [-e extension] [[section] page ...] ...

man -l [-7] [-tZHT device] [-p string] [-P pager] [-r prompt] file ...

man -k [apropos options] regexp ...

man -f [whatis options] page ...

DESCRIPTION

man is the system’s manual pager. Each page argument given to man is normally the name of a program, utility or function. The manual page associated with each of these arguments is then found and displayed. A section, if provided, will direct man to look only in that section of the manual. The default action is to search in all of the available sections, following a pre-defined order and to show only the first page found, even if page exists in several sections.

...

Commands:

ENTER – scroll down

"b" – go back

"/keyword" – search the man page for a word

(assuming more is the pager)

Finding Commands:

# apropos CPU

top (1) - display top CPU processes

# whatis apropos

apropos (1) - search the manual page names and descriptions

NOTE: apropos is man -k (for "keyword search") and whatis is man -f (for "fullword search")

3) Study and practice combining these key text processing tools:

I can't even tell you how important this is.

AWK, SED, TR, GREP, SORT, UNIQ, CUT, PASTE, JOIN, HEAD, TAIL, CAT, TAC
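A classic way to practice combining these tools is a word-frequency counter. The sketch below is one possible version; the function name top_words and the sample text are invented for the example.

```sh
#!/bin/sh
# Sketch: top-N most frequent words on stdin, built only from tr, sort, uniq, head and awk.
# top_words is a hypothetical helper name; N defaults to 10.
top_words() {
  tr -cs '[:alpha:]' '\n' |          # one word per line
    tr '[:upper:]' '[:lower:]' |     # fold case
    sort | uniq -c |                 # count identical words
    sort -k1,1nr -k2 |               # highest count first, ties alphabetical
    head -n "${1:-10}" |
    awk '{ print $2, $1 }'           # print "word count"
}

printf '%s\n' 'The cat and the dog' 'the Cat sat' | top_words 2
```

Each stage does one small job, so swapping a stage (for example cut instead of awk) is a good exercise.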

4) Learn how to manage running processes

PS, TOP, FG, BG, KILL, vmstat, iostat, netstat, lsof, fuser, strace

ctrl-d (end of input)

ctrl-c (interrupt the foreground process)

ctrl-z (suspend the foreground process)
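A minimal sketch of the same ideas from a script rather than the keyboard: start a background job, check that it is alive, then stop it. The function name demo_job is made up, and sleep stands in for a real long-running program.

```sh
#!/bin/sh
# Sketch: background a job, test for it, terminate it. demo_job is a hypothetical name.
demo_job() {
  sleep 30 &                            # start a job in the background
  pid=$!                                # $! holds the PID of the last background job
  kill -0 "$pid" && echo "running"      # signal 0 only checks that the process exists
  kill "$pid"                           # default signal is SIGTERM
  wait "$pid" 2>/dev/null || true       # reap the job; its status reflects the signal
  kill -0 "$pid" 2>/dev/null || echo "stopped"
}

demo_job
```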

5) Learn the BASH shell

UNIX pipes combine and process streams:

| Integer value | Name |

|---|---|

| 0 | Standard Input (stdin) |

| 1 | Standard Output (stdout) |

| 2 | Standard Error (stderr) |
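The three descriptors in the table can be redirected independently. The sketch below shows the most common forms; the function name noisy is invented and exists only to write one line to each output stream.

```sh
#!/bin/sh
# Sketch: a function that writes to both stdout (fd 1) and stderr (fd 2).
# noisy is a hypothetical name used only for this demonstration.
noisy() {
  echo "result"          # goes to fd 1
  echo "warning" >&2     # goes to fd 2
}

noisy 2>/dev/null | tr '[:lower:]' '[:upper:]'       # only stdout enters the pipe
noisy 2>&1 | sort                                    # merge stderr into stdout, pipe sees both
noisy 2>&1 >/dev/null | tr '[:lower:]' '[:upper:]'   # order matters: only stderr enters the pipe
```

Redirections are applied left to right, which is why the last line pipes stderr while discarding stdout.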

6) Keep going

Check out history, wget, curl, xml2, xmlformat and GNU parallel... and tons more.

(You'll be glad you did)

Friday, September 02, 2011

NCD for news article data mining

The Normalized Compression Distance (NCD) is a powerful formula that can be used to discover hidden relationships in almost any data.

C = a compressor program like Zip; C(x) is the compressed size of x

x and y are the 2 strings you want to compare

It works by simply compressing three things: the first text string C(x), the second C(y), and the concatenation of both strings C(xy).

NCD(x, y) = (C(xy) - min(C(x), C(y))) / max(C(x), C(y))

Apply the formula and you get a distance score between the 2 strings from 0 to 1 (plus a slight error amount). 0 means the articles are identical, and 1 means they are absolutely dissimilar.

NCD was discovered by Rudi Cilibrasi and Paul Vitanyi as described in their 2005 paper "Clustering by Compression"

http://arxiv.org/PS_cache/cs/pdf/0312/0312044v2.pdf
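For a quick feel of the score, here is a minimal sketch that computes NCD(x, y) = (C(xy) - min(C(x), C(y))) / max(C(x), C(y)) with gzip standing in for the compressor. The helper names csize and ncd and the two sample strings are assumptions made for the example; on strings this short the gzip header overhead inflates the error term.

```sh
#!/bin/sh
# Sketch: NCD of two strings using gzip as the compressor C.
# csize and ncd are hypothetical helper names.
csize() {
  # compressed size in bytes of the string given as $1
  printf '%s' "$1" | gzip -c | wc -c | tr -d ' '
}

ncd() {
  cx=$(csize "$1"); cy=$(csize "$2"); cxy=$(csize "$1$2")
  awk -v cx="$cx" -v cy="$cy" -v cxy="$cxy" 'BEGIN {
    min = (cx < cy) ? cx : cy
    max = (cx > cy) ? cx : cy
    printf "%.3f\n", (cxy - min) / max
  }'
}

a='the quick brown fox jumps over the lazy dog near the quiet river bank'
b='0123456789 unrelated digits and symbols !@#$%^&*() xyzzy plugh 9876543210'
ncd "$a" "$a"    # close to 0: the second copy compresses almost for free
ncd "$a" "$b"    # noticeably larger: the strings share little
```

For real articles, a stronger compressor and longer inputs should give more useful distances than this toy comparison.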

Consider the following article:


What If He Had Gone on Vacation-

Jack Kilby describes how he developed the world's first integrated circuits.

"After several interviews, I was hired by Willis Adcock of Texas Instruments. My duties were not precisely defined, but it was understood that I would work in the general area of microminiaturization. Soon after starting at TI in May 1958, I realized that since the company made transistors, resistors, and capacitors, a repackaging effort might provide an effective alternative to the Micro-Module. I therefore designed an IF amplifier using components in a tubular format and built a prototype. We also performed a detailed cost analysis, which was completed just a few days before the plant shut down for a mass vacation.

"As a new employee, I had no vacation time coming and was left alone to ponder the results of the IF amplifier exercise. The cost analysis gave me my first insight into the cost structure of a semiconductor house. The numbers were high — very high — and I felt it likely that I would be assigned to work on a proposal for the Micro-Module program when vacation was over unless I came up with a good idea very quickly. In my discouraged mood, I began to feel that the only thing a semiconductor house could make in a cost-effective way was a semiconductor. Further thought led me to the conclusion that semiconductors were all that were really required — that resistors and capacitors, in particular, could be made from the same material as the active devices.

"I also realized that, since all of the components could be made of a single material, they could also be made in situ, interconnected to form a complete circuit. I then quickly sketched a proposed design for a flip-flop using these components. Resistors were provided by bulk effect in the silicon, and capacitors by p-n junctions.

"These sketches were quickly completed, and I showed them to Adcock upon his return from vacation. He was enthused but skeptical and asked for some proof that circuits made entirely of semiconductors would work. I therefore built up a circuit using discrete silicon elements. Packaged grown-junction transistors were used. Resistors were formed by cutting small bars of silicon and etching to value. Capacitors were cut from diffused silicon power transistor wafers, metallized on both sides. This unit was assembled and demonstrated to Adcock on August 28, 1958.

"Although this test showed that circuits could be built with all semiconductor elements, it was not integrated. I immediately attempted to build an integrated structure as initially planned. I obtained several wafers, diffused and with contacts in place. By choosing the circuit, I was able to lay out two structures that would use the existing contacts on the wafers. The first circuit attempted was a phase-shift oscillator, a favorite demonstration vehicle for linear circuits at that time.

"On September 12, 1958, the first three oscillators of this type were completed. When power was applied, the first unit oscillated at about 1.3 megacycles.

"The concept was publicly announced at a press conference in New York on March 6, 1959. Mark Shepherd said, "I consider this to be the most significant development by Texas Instruments since we divulged the commercial availability of the silicon transistor." Pat Haggerty predicted the circuits first would be applied to the further miniaturization of electronic computers, missiles, and space vehicles and said that any application to consumer goods such as radio and television receivers would be several years away."

A television program in 1997 said about the integrated circuit and Jack Kilby, "One invention we can say is one of the most significant in history -- the microchip, which has made possible endless numbers of other inventions. For the past 40 years, Kilby has watched his invention change the world. Jack Kilby — one of the few people who can look around the globe and say to himself 'I changed how the world functions.'"

Can NCD work as a relationship classifier in news articles?

First, I ran a part-of-speech tagger on the article text and then looked up all entities (proper nouns) in Wikipedia. The Wikipedia articles were then used to build a related word list for each entity. Next, I computed the normalized compression distance (NCD) for every pair of entities to get a distance matrix. Cluster the matrix, draw the resulting binary tree with dot, and the relationships begin to appear.

From the 50 entities mapped below, a few relations are apparent. (Probably should have just picked the top 25 entities though, because the clustering works better with fewer items.)

A) Mark Shepherd and Willis Adcock are related through Jack Kilby

B) The integrated circuit, transistor, capacitor and resistor are related by silicon.

C) Concepts and ideas are related through thought.

Monday, August 15, 2011

Move your web content to Rackspace Cloudfiles

I'm gonna make this quick

To start hosting your web content on Rackspace Cloudfiles, you need to complete the following tasks:

Log in to the cloudfiles management console: http://manage.rackspacecloud.com

- Create Cloudfile containers to hold your web content ( plan on storing up to 10K items per container so you can use cloudfuse to mount your containers to the filesystem). You need to come up with an organizational structure that makes sense for your site. For example, you could use a container for your audio named c_audio and c_pics for pictures. If you know you will have over 10K files you should try to break them up like this: c-pics000,c-pics001, c-pics002, ...

- Click "Publish to CDN" - This will give you your CDN URL.

- Add cname entries into your DNS to create a predictable name for your containers.

- Write a PHP script to query your database and copy all content listed in your database into the cloud. Then save the new cloud location for the content back to your database. For example, if you use auto-increment and have 30 thousand files you can put the first 10k into c-pics000 container, second 10K into c-pics001 container and so on.

- For user contributed content- update your website file upload script to save the content to the cloud instead of the filesystem and save the new content location in the database. For images you may want to create the different sizes you will need before uploading to the cloud. Example: me.jpg and me_thumb.jpg

- Connect your Cloudfile containers to your filesystem with cloudfuse.

Add the following entry to /etc/fstab:

cloudfuse /mnt/cloud fuse defaults,allow_other,username=your_username,api_key=your_api_key

Then mount /mnt/cloud and you will see all your containers as directories. This is a great way to see what you have using ls and also to make backups of your cloudfiles to another hard drive on your server.
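The 10K-per-container bucketing described in the steps above comes down to one integer division. The sketch below shows it in shell rather than PHP; the function name container_for_id is made up, the c-pics prefix follows the naming example above, and it assumes auto-increment ids start at 1.

```sh
#!/bin/sh
# Sketch: map an auto-increment row id to a container name, 10,000 items per container.
# container_for_id is a hypothetical helper; ids are assumed to start at 1.
container_for_id() {
  id=$1
  printf 'c-pics%03d\n' $(( (id - 1) / 10000 ))
}

container_for_id 1        # c-pics000
container_for_id 10000    # c-pics000
container_for_id 10001    # c-pics001
container_for_id 30000    # c-pics002
```

The same arithmetic ports directly to the migration script, so a file's container can always be derived from its id.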

You're done!

Linux virtualization with chroot

Big savings with virtualization (Exponential?)

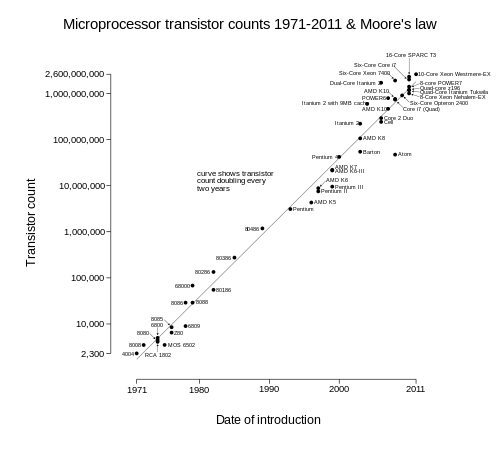

According to Moore's Law we get better, and generally cheaper, servers every year. In the data center, we can take advantage of this by leasing servers instead of buying, if we are using Linux virtualization.

There are many virtualization containers that work perfectly well for Linux. The three I have used most over the years are Xen, VMware and Oracle VM VirtualBox. Each has its own quirks you need to learn and requires a software install to use. The one disadvantage they all share, though, is that they add complexity and there is a cost to switching containers. For a pure Linux shop there is a simpler way to virtualize without adding software.

Good old chroot will do the trick

As long as the host and the virtualized OS will run on the same kernel, you don't need any special software to virtualize in Linux. Using chroot, you can build an OS inside an OS. I currently run CentOS as the host OS and Gentoo for my virtualized instances.

Here is how I set it up:

# cat /etc/redhat-release

CentOS release 5.5 (Final)

#mkdir /mnt/gentoo

#cd /mnt/gentoo

First: Download a tar ball of a running Gentoo OS and unpack it

#wget http://distro.ibiblio.org/pub/linux/distributions/gentoo/releases/amd64/autobuilds/current-install-amd64-minimal/stage3-amd64-20110811.tar.bz2

#bunzip2 stage3-amd64-20110811.tar.bz2

#tar xvf stage3-amd64-20110811.tar

Next: Set up networking and devices. Copy these 3 lines into the /etc/rc.local of your host OS (CentOS in my case)

mount -t proc none /mnt/gentoo/proc

cp -L /etc/resolv.conf /mnt/gentoo/etc/resolv.conf

chroot /mnt/gentoo su -c '/etc/init.d/start_gentoo.sh' -

Last: add a shell script into your virtualized OS (/mnt/gentoo) to start your webservers, databases and any other programs you need to run on a reboot.
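What goes into that start script depends entirely on what the instance runs. As a rough sketch only: the version below starts whichever of the named services has an init script and skips the rest. The function name start_services and the INIT_DIR variable are assumptions, and any service names you pass are placeholders for your own.

```sh
#!/bin/sh
# Sketch of /etc/init.d/start_gentoo.sh (lives inside the chroot).
# start_services and INIT_DIR are hypothetical names, not part of Gentoo.
INIT_DIR=${INIT_DIR:-/etc/init.d}

start_services() {
  for svc in "$@"; do
    if [ -x "$INIT_DIR/$svc" ]; then
      "$INIT_DIR/$svc" start
    else
      echo "skipping $svc: no init script in $INIT_DIR" >&2
    fi
  done
}

# Services come from the command line; with no arguments nothing is started.
start_services "$@"
```

Since the rc.local line above calls the script without arguments, either add the service names to that call or replace "$@" on the last line with a fixed list.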

To log in to your new virtualized server:

#chroot /mnt/gentoo

Come back in 1 year

To copy it to a new leased machine in a year once prices have dropped and CPU cores have doubled:

Stop the running programs (whatever you put into start_gentoo.sh) so that you don't copy things like your database tables during a write.

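#tar cvfz /mnt/gentoo.20110815.tar.gz --one-file-system /mnt/gentoo

The archive is written outside /mnt/gentoo so that tar does not try to archive its own output, and --one-file-system keeps the mounted /proc out of it. You can now ftp this to your new server, make a new /mnt/gentoo directory, untar the tar ball, set up your start script and /etc/rc.local and you are up and running on your new, faster machine.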

#chroot /mnt/gentoo

Improving a good thing

So that's it! I have had this setup up and working for the past 5 years, and every year when I change to new servers it gets easier (Hint: if you use the cloud to host your web content, upgrading to new servers gets even easier; see my next post on this topic).

Now I am planning to switch my setup from Gentoo to Ubuntu because Ubuntu has better support in the community. I will write that up once I get it working (I started using Gentoo initially because it is a source distribution, and due to that, there are tar balls that unpack and work easily in chroot). If anyone has tips on how to get Ubuntu running in a chroot environment, please leave a comment.
